How Proptech Is Revolutionising Real Estate

Real estate, the world’s largest asset class, valued at a staggering $7.56 trillion, has long been a sleeping giant when it comes to technological innovation. But now, it’s waking up. Recent years have witnessed an unprecedented surge in proptech.

What is Proptech?

Proptech is short for property technology which, as its name suggests, sits at the intersection of property and technology.

Broadly, it refers to the innovative use of technology in the real estate industry and covers a wide range of tech solutions and innovations aimed at disrupting and digitising various aspects of the real estate sector, including property management, leasing, sales, construction, investment and others.

Proptech tackles key issues in how we use and benefit from real estate. It’s already streamlining processes and transactions, creating new opportunities, addressing pain points, cutting costs, enhancing connectivity and productivity, and boosting convenience for residents, owners, landlords and other stakeholders.

Why the Surge in Proptech?

Several key factors have contributed to the rapid rise of proptech. The COVID-19 pandemic significantly accelerated the need for virtual, no-touch experiences, driving technological innovation across the sector.

Technological advancements with practical applications in real estate have also played a crucial role. Examples of innovations include:

- Virtual Reality (VR) and Augmented Reality (AR) enhancing property viewing experiences.

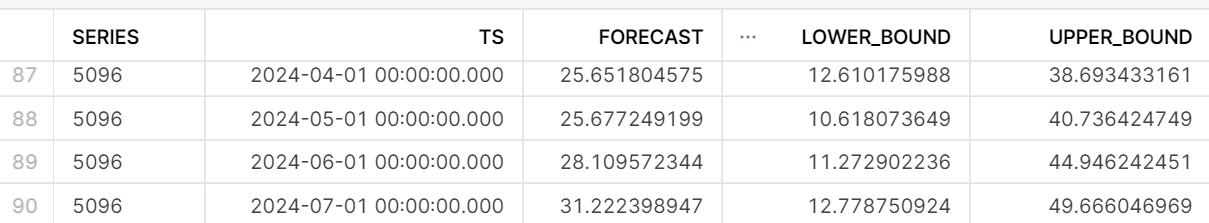

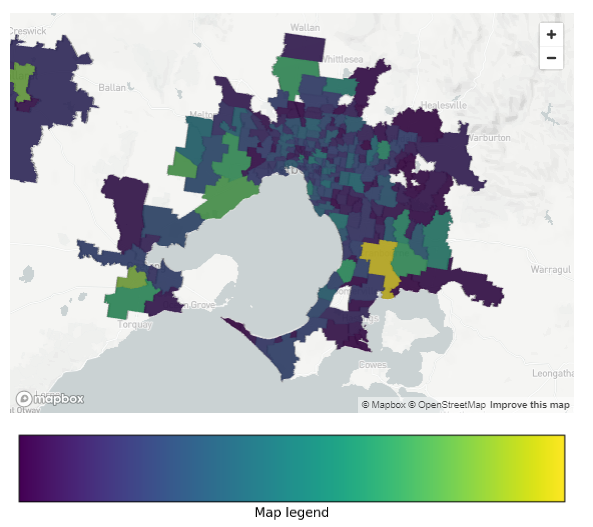

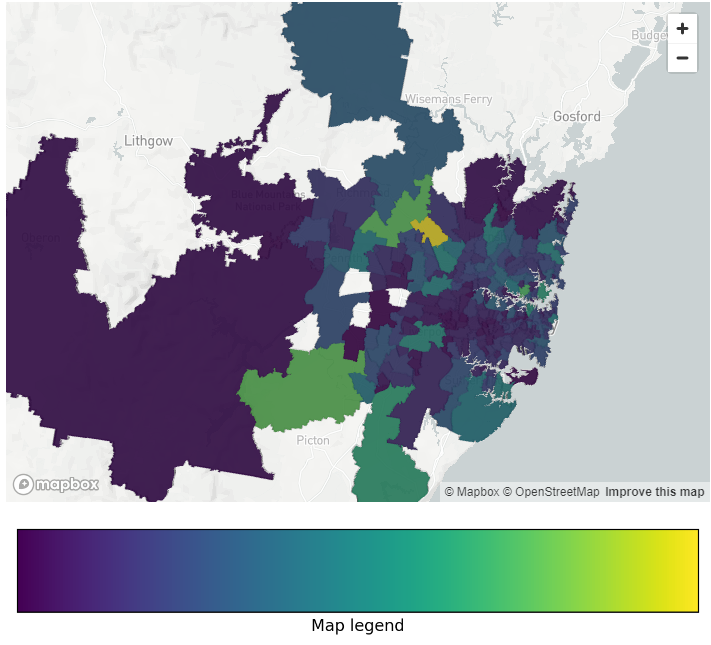

- Artificial Intelligence (AI) and Machine Learning (ML) providing data-driven insights and personalised recommendations.

- Internet of Things (IoT) enabling smart home features and efficient property management.

- Blockchain Technology allowing fractional property ownership, offering new ways for buyers and sellers to connect and potentially cutting costs by removing intermediaries from the transaction process.

- Drone Technology offering virtual tours and aerial views.

Increased connectivity and the availability of real estate data have improved customer experiences and enabled faster, more informed decisions in real estate transactions, planning and development.

Regulatory changes have also reshaped the way real estate operates, serving as a catalyst for proptech innovation. By creating new challenges and setting higher standards, regulations drive the development of advanced technologies and solutions that help businesses comply, operate more efficiently, and enhance their services. This continuous push for innovation helps the real estate industry evolve to meet modern demands.

The pressing issue of housing affordability has spurred creative approaches to real estate ownership and investment too. Proptech and financial technology (fintech) are democratising property investment, making it more accessible through crowdfunding platforms, fractional ownership, and Real Estate Investment Trusts (REITs).

The potential for disruption and innovation in the real estate sector has attracted significant investor interest. Corporate venture capital units and accelerator programs further support and fast-track proptech startup funding.

Proptech’s Potential to Reimagine Real Estate

Proptech has gained significant traction in recent years as real estate professionals and investors recognise the potential of technology to disrupt the sector.

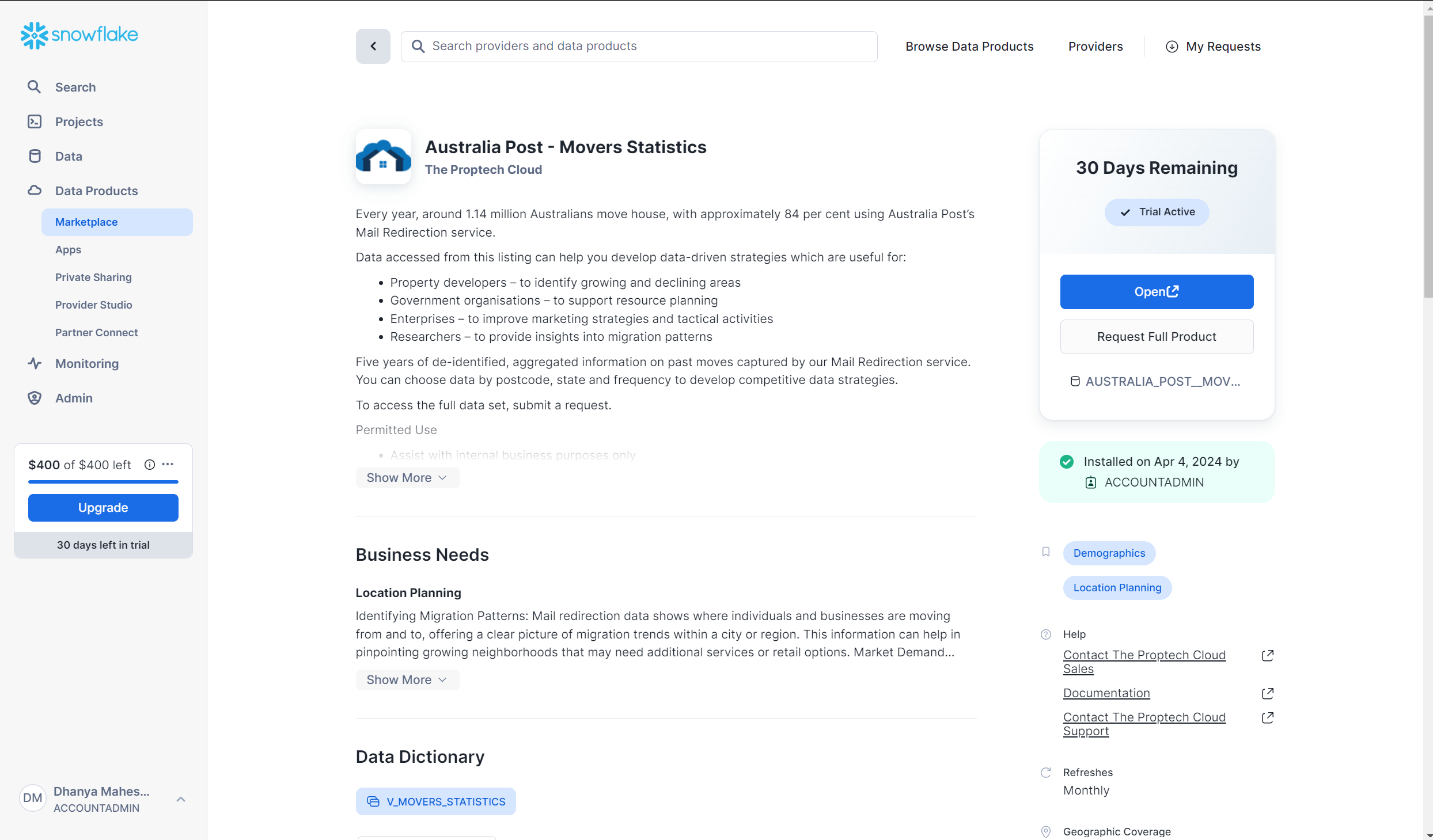

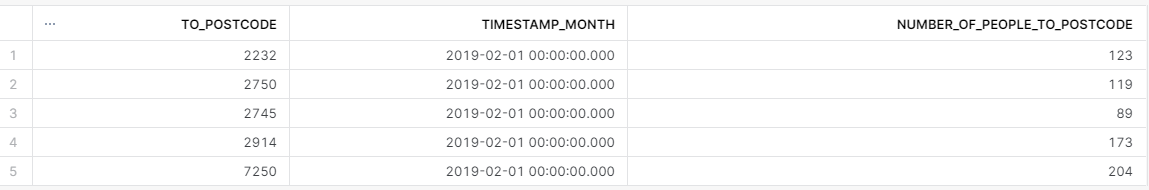

According to PropTechBuzz, hundreds of Australian proptech startups are leveraging advanced technologies such as big data, AI and AR, and generating over $1.4 billion of direct economic output.

Yet, we are only on the cusp of proptech’s true potential.

Signs show that this fledgling industry has yet to reach its pinnacle.

A recent Deloitte Global Real Estate Outlook Survey of real estate owners and investors across North America, Europe, and Asia/Pacific reveals:

- Many real estate firms are addressing years of amassed technical debt by ramping up technology capabilities. 61% admit their firms’ core technology infrastructures still rely on legacy systems. However, nearly half are making efforts to modernise.

- Many real estate firms aren’t ready to meet environmental, social, and governance (ESG) regulations. 59% of respondents say they do not have the data, processes, and internal controls necessary to comply with these regulations and expect it will take significant effort to reach compliance.

Barriers to progress still exist.

A 2021 survey of 216 Australian property companies by the Property Council of Australia and Yardi Systems shows that:

- There is the perception that solutions must be specially developed or customised (34%).

- 26% of respondents see changing existing behaviours as the biggest obstacle to overcome, followed by cost (23%) and time constraints (11%).

The Future of Proptech

The future of proptech is looking bright.

As new technology, trends, and other contributing factors converge to accelerate innovation in real estate and its neighbouring sectors, new ideas take flight and promise to disrupt traditional processes.

Proptech brings exciting benefits, boosting the real estate industry’s digital presence and productivity, and enhancing experiences for everyone involved.

It fosters innovation and automation, adding convenience, efficiency, transparency and accuracy to administrative and operational tasks.

Additionally, proptech holds the promise of better access to data and analytics and the integration of sustainability practices.

As technology continues to advance and consumer preferences evolve, proptech is likely to play an increasingly prominent role in shaping the future of the real estate industry.
