Data consultancy Data Army taps into the hidden insights of the Australia Post Movers Statistics dataset to forecast Australia's future hotspots, predicting where people are likely to move next.

By analysing trends in mail redirection requests with the Time Series Forecasting function, we forecast how many people are likely to move into each postcode.

This advanced analysis is a feature of Snowflake Cortex, Snowflake’s comprehensive Artificial Intelligence and Machine Learning service, designed to empower your decisions with data-driven intelligence.

Overview of Data Used

- The primary dataset is Australia Post’s Movers Statistics, currently available from The Proptech Cloud with a limited free trial. Updated monthly, it contains de-identified and aggregated data on moves across Australia based on mail redirection requests for the previous 5 years. For this exercise, we used the data from February 2019 to January 2024. Each entry in the data includes the postcode the household relocated from, the postcode the household relocated to, the month of relocation and the number of people who relocated.

- The secondary dataset is Geography Boundaries & Insights – Australia, specifically the Australian Bureau of Statistics (ABS) 2021 Postcode Boundary Data (ABS_POA_2021_AUST_GDA2020), which is used for the geospatial visualisations. This dataset is available free from The Proptech Cloud.

Australia Post Movers Statistics Data

This dataset contains five years of de-identified, aggregated information on past moves captured by Australia Post’s Mail Redirection service.

Access Australia Post mail redirect statistics now to help you develop competitive data-driven strategies.

Introduction to Snowflake Functionality & Technology Stack

- The Snowflake Forecasting Model, which is part of the Snowflake Cortex ML-Powered Functions. This model uses a Machine Learning algorithm to predict future trends from historical data.

- Snowpark, the set of libraries in Snowflake that allows us to deploy and process the data pipeline using Python.

- Streamlit, which is used to visualise the forecasts in interactive Python apps. This functionality is fully integrated within the Snowflake Platform.

Introduction to Snowflake’s Forecasting Model

Snowflake Cortex is a service by Snowflake which offers Machine Learning (ML) and Artificial Intelligence (AI) solutions.

This blog will focus on the Time-Series Forecasting Model, which is part of this service. The ML algorithm used in this forecasting model is Gradient Boosting (GB).

The intuition behind the GB algorithm is that a combination of multiple models that learn from and improve on each other will perform better than a single model. To implement this approach, the GB algorithm first fits an initial model to the dataset.

The second model assesses where the first model performs poorly and tries to improve on these areas. This process continues, with each new model correcting the errors of the models before it, until further iterations no longer improve the outcome. The resulting combination of models is then used for predictions.

The GB algorithm is very popular because each model learns from the errors of the previous ones, and it performs strongly across many different ML problems. One of the typical challenges in using a GB model is that it is usually computationally expensive and time consuming to iterate and find the optimal model. The advantage of using the Snowflake Cortex Machine Learning Powered Forecasting Model is that it is extremely quick to compute on even very large datasets, as it leverages Snowflake’s existing highly scalable infrastructure.
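To make the idea concrete, here is a minimal sketch of gradient boosting in plain Python. It is not Snowflake's implementation: the one-split "stump" models, the learning rate, the number of rounds and the toy monthly figures are all assumptions chosen for illustration. Each round fits a new stump to the errors left by the models so far and adds a scaled-down version of it to the running prediction.

```python
from statistics import mean


def fit_stump(xs, residuals):
    """Find the single threshold split on x that best fits the residuals (least squares)."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # skip the max so the right side is never empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_value, right_value = mean(left), mean(right)
        error = sum((r - left_value) ** 2 for r in left)
        error += sum((r - right_value) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_value, right_value)
    return best[1:]  # (threshold, left_value, right_value)


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.3):
    """Start from the mean, then repeatedly fit a stump to the remaining errors."""
    base = mean(ys)
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * predict_stump(stump, x)
                       for p, x in zip(predictions, xs)]
    return base, learning_rate, stumps


def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


def mse(predicted, actual):
    return mean((p - a) ** 2 for p, a in zip(predicted, actual))


# Toy data (hypothetical): month index and number of people moving in
months = list(range(1, 13))
people = [20, 22, 21, 25, 27, 26, 30, 33, 31, 36, 38, 37]

model = gradient_boost(months, people)
baseline_mse = mse([mean(people)] * len(people), people)
boosted_mse = mse([predict(model, m) for m in months], people)
print(f"baseline MSE: {baseline_mse:.2f}, boosted MSE: {boosted_mse:.2f}")
```

The boosted error on the training data ends up lower than that of the single starting model, which is the "models improving on each other" behaviour described above.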

Technical How-To Guide

The forecasting model example shown will use Snowpark to create the data pipeline and use Streamlit for the data visualisations.

Step 1: Accessing the data

1. Go to the link on the listing to access the Australia Post – Movers Statistics Data.

2. Click the “Access Data Listing on Snowflake Marketplace” button to access the data listing on the Snowflake Marketplace.

3. Click the ‘Get’ button at the top right of the page to access the Australia Post Movers Statistics dataset. This will redirect to a page to either create a new Snowflake account or sign in if one already exists.

4. Once your Snowflake account is set up and running, the Australia Post Movers Statistics dataset listing can be found under Data Products and then Marketplace.

5. Click on the Open button to explore the sample data within the Snowflake environment. This will redirect to a Snowflake worksheet which will show some sample queries.

6. The full product can also be requested from the Marketplace page with the ‘Request Full Product’ button if access to the entire dataset is needed.

Step 2: Setting Up the Example Data

The forecasting model created is a multi-time-series model. The following types of variables were needed to create this:

- A series column, which identifies each individual time series so that multiple series can be forecast at once

- A timestamp column

- A target column which includes a quantity of interest at each timestamp

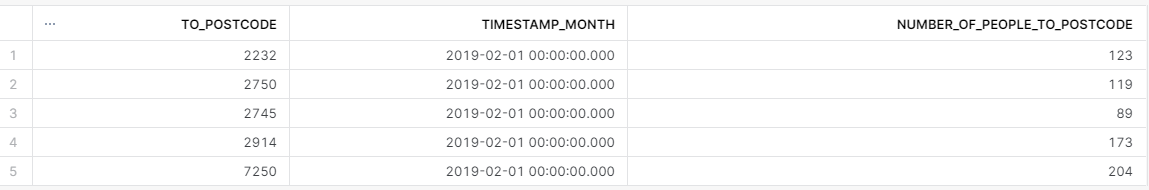

The cleaning and transformation of the dataset to prep the data for forecasted was completed by running SQL queries using Snowpark. A sample of this data is shown below:

migration_people_moving_to to use in the forecasting model.Step 3: Creating the Forecasting Model

Each row in the migration_people_moving_to view contains the three types of columns needed to create a multi-series forecasting model: the postcode (series column), the month (timestamp column) and the number of people who moved into the postcode that month (target column).
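The shape of this view can be sketched in plain Python. The actual cleaning was done with SQL through Snowpark and is not reproduced here; the sample rows below are hypothetical, and only the three output column names are taken from the model definition that follows. The sketch sums the raw origin-to-destination moves so that there is one row per destination postcode per month.

```python
from collections import defaultdict

# Hypothetical raw rows: (from_postcode, to_postcode, month, number_of_people)
raw_moves = [
    ("3000", "3977", "2023-11-01", 4),
    ("3053", "3977", "2023-11-01", 3),
    ("3000", "3030", "2023-11-01", 5),
    ("3121", "3977", "2023-12-01", 6),
]


def build_series_rows(moves):
    """Aggregate moves into one row per (destination postcode, month)."""
    totals = defaultdict(int)
    for _from_postcode, to_postcode, month, people in moves:
        totals[(to_postcode, month)] += people
    return [
        {
            "to_postcode": postcode,                  # series column
            "timestamp_month": month,                 # timestamp column
            "number_of_people_to_postcode": people,   # target column
        }
        for (postcode, month), people in sorted(totals.items())
    ]


series_rows = build_series_rows(raw_moves)
for row in series_rows:
    print(row)
```

Each destination postcode becomes its own time series, which is what allows a single model to forecast every postcode at once.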

The code to create a forecasting model is as follows:

    CREATE OR REPLACE SNOWFLAKE.ML.FORECAST forecasting_model_people_to (
        INPUT_DATA => SYSTEM$REFERENCE('VIEW', 'migration_people_moving_to'),
        SERIES_COLNAME => 'to_postcode',
        TIMESTAMP_COLNAME => 'timestamp_month',
        TARGET_COLNAME => 'number_of_people_to_postcode'
    );

This creates and trains a model called forecasting_model_people_to.

Step 4: Calling and Interpreting the Forecasting Model

The model can then be used to forecast any number of periods into the future. The model performs better for forecasting periods that are closer to the training dataset, and is less reliable when it is used to forecast periods further into the future.

The code used to forecast the number of people moving into a postcode every month for 12 months and save it to a table is shown below.

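    BEGIN
        -- Forecast the next 12 months for every postcode
        CALL forecasting_model_people_to!FORECAST(FORECASTING_PERIODS => 12);
        -- Capture the query ID of the forecast call
        LET x := SQLID;
        -- Save the forecast results to a table
        CREATE OR REPLACE TABLE forecast_12periods_move_to AS
            SELECT * FROM TABLE(RESULT_SCAN(:x));
    END;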

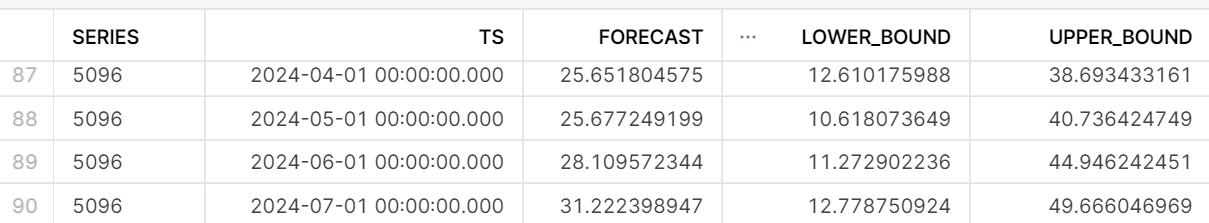

An example of the forecasting model output results are shown below.

The output above can be interpreted as follows: approximately 26 people are forecasted to move into postcode 5096 (which covers Para Hills, Para Hills West and Gulfview Heights in Adelaide) in April 2024. The lower and upper bounds of 12.6 and 38.7 represent the prediction interval: the model is 95% confident that the number of people who will move into postcode 5096 in April 2024 is between 12.6 and 38.7. A narrower prediction interval indicates less uncertainty in the forecast, so the estimate is more likely to be close to the actual value. A wider interval indicates the opposite.

The default prediction interval when calling a model in Snowflake is 95%. However, this can be configured when calling the model by adding a prediction interval. The code below shows how to call a model with an 80% prediction interval:

    CALL forecasting_model_people_to!FORECAST(FORECASTING_PERIODS => 12, CONFIG_OBJECT => {'prediction_interval': 0.8});
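To get a feel for how the interval level changes the bounds, the rough sketch below rescales the 95% interval for postcode 5096 to an 80% level. It assumes the forecast errors are approximately normally distributed and that the interval is symmetric around its midpoint. That is our simplifying assumption for illustration, not a description of how Snowflake computes its bounds, so the real 80% bounds returned by the model may differ.

```python
from statistics import NormalDist


def rescale_interval(lower, upper, from_level=0.95, to_level=0.80):
    """Approximate a prediction interval at a new level, assuming normal errors."""
    centre = (lower + upper) / 2
    half_width = (upper - lower) / 2
    # z-scores for the two-sided intervals at each level
    z_from = NormalDist().inv_cdf(0.5 + from_level / 2)
    z_to = NormalDist().inv_cdf(0.5 + to_level / 2)
    new_half_width = half_width * z_to / z_from
    return centre - new_half_width, centre + new_half_width


# 95% bounds for postcode 5096 in April 2024, taken from the output above
lower_80, upper_80 = rescale_interval(12.6, 38.7)
print(f"approximate 80% interval: {lower_80:.1f} to {upper_80:.1f}")
```

The lower confidence level gives a narrower range: the model commits to tighter bounds but is expected to be wrong more often.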

Step 5: Visualising the forecasts using Snowpark and Streamlit

The 12 months of forecast results were then aggregated to produce the total number of people forecasted to move into each postcode across Australia.

The data was then also joined with the Australian Postcode boundaries from the Geography Boundaries & Insights – Australia to allow for geospatial visualisations.
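The aggregation step can be sketched in plain Python as follows. The SERIES and FORECAST field names are assumptions about the forecast output columns, and the forecast values are hypothetical. Only three months per postcode are shown to keep the example short.

```python
from collections import defaultdict

# Hypothetical monthly forecast rows for two postcodes
forecast_rows = [
    {"SERIES": "5096", "TS": "2024-02-01", "FORECAST": 24.0},
    {"SERIES": "5096", "TS": "2024-03-01", "FORECAST": 26.0},
    {"SERIES": "5096", "TS": "2024-04-01", "FORECAST": 25.0},
    {"SERIES": "3977", "TS": "2024-02-01", "FORECAST": 110.0},
    {"SERIES": "3977", "TS": "2024-03-01", "FORECAST": 105.0},
    {"SERIES": "3977", "TS": "2024-04-01", "FORECAST": 115.0},
]


def total_by_postcode(rows):
    """Sum the monthly forecasts for each postcode."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["SERIES"]] += row["FORECAST"]
    return dict(totals)


totals = total_by_postcode(forecast_rows)

# Rank postcodes from the most to the fewest forecasted arrivals
ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
print(ranked)
```

In Snowflake the same result would normally come from a GROUP BY on the forecast table. The Python version is only meant to show what the aggregated figures represent.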

The visualisations were hosted using Streamlit within the Snowflake User Interface.

Streamlit is an open-source Python library which allows for the creation and sharing of data web applications. Using Streamlit within the Snowflake console makes it possible to securely clean, transform and visualise the data in one place, without the need for any external environments.

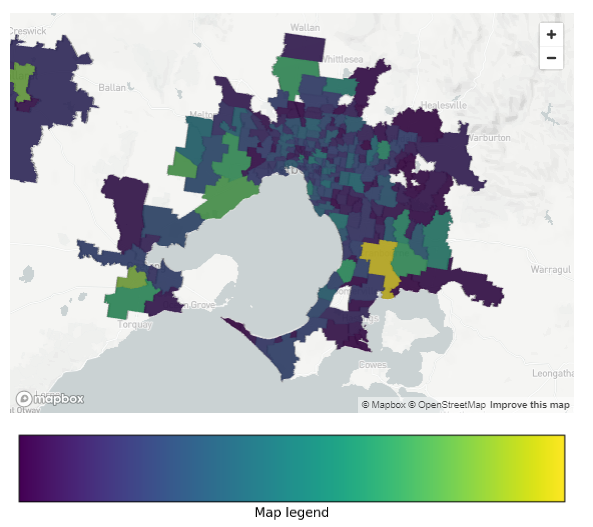

Data Visualisation – Greater Melbourne Region

The visualisation shows the postcodes that people are moving to in the Greater Melbourne region.

The green and yellow regions show the places where high numbers of people are forecasted to move in the next year, while the purple and blue regions show the places that are forecasted to have lower levels of relocation in the next year.

Interestingly, the visualisation shows that places in the outer east, including Cranbourne and Clyde North, and the outer west, including Point Cook and Werribee South, are forecasted to have high levels of migration. The inner-city postcodes, which include suburbs such as Fitzroy, Brunswick and North Melbourne, are forecasted to have much less migration in the next year.

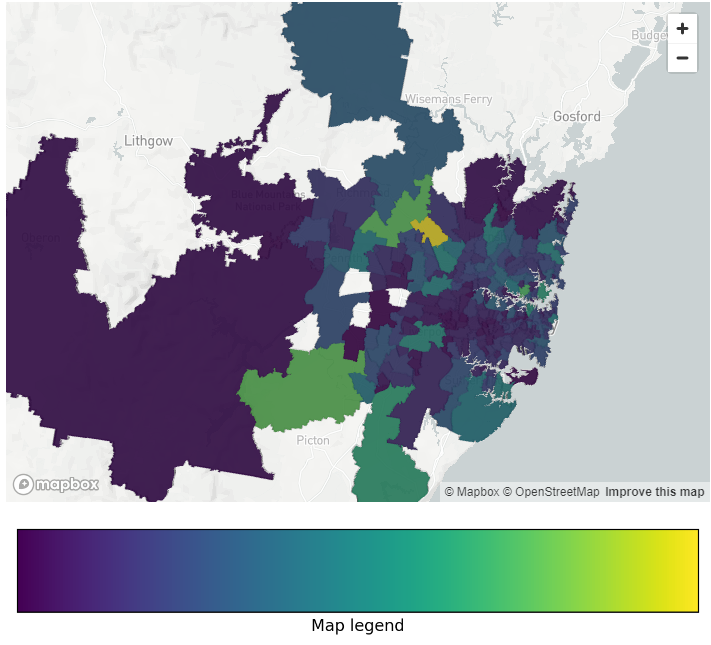

Data Visualisation – Greater Sydney Region

A similar visualisation was done in the Greater Sydney area, where a similar trend was observed.

High levels of migration are forecasted for outer-city areas including Kellyville and North Kellyville, and outer-city south west areas including Camden and Oran Park.

Like Melbourne, there seems to be less migration forecasted for inner city suburbs including Chippendale, Ultimo and Redfern.

Steps to Create the Visualisations

The following steps were performed to create the geospatial visualisations.

Firstly, the base steps to create a Streamlit App were completed. This includes creating an app and selecting a warehouse to run the queries. This will then create a Snowpark worksheet which allows the creation of a Streamlit app using Python. The Streamlit environment also needs to be set up to allow for the ingestion of packages which requires the CREATE STREAMLIT permission.

The third-party packages were then ingested using the Packages tab at the top of the worksheet. Only packages which are supported by Snowflake are able to be ingested to ensure that the Snowflake platform remains secure. Both Matplotlib and Pydeck were ingested to create these visualisations.

The required packages were then imported to create the Streamlit visualisation:

    # Import python packages
    import streamlit as st
    from snowflake.snowpark.context import get_active_session
    import json
    import pydeck as pdk
    import matplotlib.pyplot as plt
    import matplotlib as mpl

The Snowpark package was used to connect the worksheet to the table containing the 12 month forecasting data in Snowflake. The postcode geospatial boundaries were also obtained, joined to the forecasting data and converted into a geojson format. This was achieved using the code below:

    session = get_active_session()

    # Join the forecasts to the postcode boundaries and return each boundary as GeoJSON
    query_result = session.sql("""
        SELECT
            POA_CODE_2021 AS POSTCODE_NAME,
            NUMBER_OF_PEOPLE,
            ST_ASGEOJSON(geometry) AS geojson
        FROM
            forecast_12periods_move_to -- 12-month forecast table (Step 4), aggregated per postcode
        INNER JOIN ABS_POA_2021_AUST_GDA2020
            ON POA_CODE_2021 = TO_POSTCODE
    """).collect()

Each row in the query result contains the postcode, the number of people forecasted to move into the postcode in the next year and a geojson representing the geometry of the postcode boundary. Further transformations were done on the result so that each row was transformed into a dictionary. A key aspect of this transformation was assigning an RGB colour code to each postcode depending on the number of people forecasted to migrate to that postcode. A sample of the geojson format is shown below:

    map_geojson = {"type": "FeatureCollection",
                   "features": [
                       {"type": "Feature",
                        "properties":
                            {"postcode": 2000,
                             "colour": (68, 1, 84)
                             },
                        "geometry": {"geometry in geojson format"}
                        },
                       {"type": "Feature",
                        "properties":
                            {"postcode": 2000,
                             "colour": (38, 130, 142)
                             },
                        "geometry": {"geometry in geojson format"}
                        },
                   ]
                   }
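The transformation itself is not shown in this blog, so the following is only a sketch of one way it could be done. The helper names, the sample rows and the straight-line blend between the purple and yellow ends of the viridis scale are all assumptions. Plain dictionaries stand in for the rows returned by the query.

```python
import json

# Assumed end points of the colour scale (dark purple to yellow)
COLOUR_LOW = (68, 1, 84)
COLOUR_HIGH = (253, 231, 37)


def value_to_colour(value, vmin, vmax):
    """Blend linearly between the two end colours (a simplification of viridis)."""
    if vmax == vmin:
        return COLOUR_LOW
    t = min(1.0, max(0.0, (value - vmin) / (vmax - vmin)))
    return tuple(round(lo + t * (hi - lo)) for lo, hi in zip(COLOUR_LOW, COLOUR_HIGH))


def rows_to_geojson(rows):
    """Turn query rows into a GeoJSON FeatureCollection with a colour per postcode."""
    values = [row["NUMBER_OF_PEOPLE"] for row in rows]
    vmin, vmax = min(values), max(values)
    features = []
    for row in rows:
        features.append({
            "type": "Feature",
            "properties": {
                "postcode": row["POSTCODE_NAME"],
                "colour": value_to_colour(row["NUMBER_OF_PEOPLE"], vmin, vmax),
            },
            # ST_ASGEOJSON returns a JSON string, so parse it into a dictionary
            "geometry": json.loads(row["GEOJSON"]),
        })
    return {"type": "FeatureCollection", "features": features}


# Hypothetical rows with a placeholder triangle as the boundary
square = '{"type": "Polygon", "coordinates": [[[0, 0], [1, 0], [1, 1], [0, 0]]]}'
sample_rows = [
    {"POSTCODE_NAME": "3053", "NUMBER_OF_PEOPLE": 120, "GEOJSON": square},
    {"POSTCODE_NAME": "3977", "NUMBER_OF_PEOPLE": 900, "GEOJSON": square},
]

map_geojson = rows_to_geojson(sample_rows)
print(map_geojson["features"][0]["properties"])
```

In the Streamlit app, the colour could instead come from matplotlib's viridis colormap so that it matches the legend exactly. Note that the colormap returns RGBA values between 0 and 1, which would need scaling to 0 to 255.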

The base choropleth map was then set up by assigning the centre and zoom point for the map to render.

    pydeck_chloropeth = pdk.Deck(map_style=None,
        initial_view_state=pdk.ViewState(
            latitude={insert centre latitude},
            longitude={insert centre longitude},
            zoom={insert zoom level},
        ),

The geojson created in the step above was then added to the base map to create the choropleth layer using the code below, which completes the pdk.Deck call.

        layers=[
            pdk.Layer(
                "GeoJsonLayer",
                map_geojson,  # name of the geojson created earlier
                opacity=0.8,
                stroked=False,
                filled=True,
                extruded=True,
                wireframe=True,
                get_fill_color='properties.colour',
                get_line_color=[255, 255, 255],
            )
        ],
    )

The legend on the map was created using the colorbar function in the matplotlib library.

    fig, ax = plt.subplots(figsize=(6, 1), layout='constrained')
    cmap = mpl.cm.viridis
    norm = mpl.colors.Normalize(vmin=0, vmax=1)
    fig.colorbar(mpl.cm.ScalarMappable(norm=norm, cmap=cmap),
                 cax=ax, orientation='horizontal', label='Map legend', ticks=[])

Finally, the following lines of code were used to render both the choropleth map and the legend on the Streamlit app.

    st.pydeck_chart(pydeck_chloropeth)
    st.pyplot(fig)

Summary

In this blog, the Australia Post Movers Statistics Marketplace listing was used along with Snowflake's Cortex ML Forecasting function to forecast the postcodes within Australia that have high levels of population movement.

The Streamlit data visualisations revealed that the postcodes with the highest number of people forecasted to move in were predominantly located in outer-city areas. Read our following blog to understand where people are moving to.

The rundown above highlights how the Snowflake Data Platform makes it straightforward for businesses to access quality data and market-leading compute, AI and visualisations all on one neat platform.

