The Three Primary Methods of Real Estate Data Integration

Real estate data is often fragmented across multiple systems. Learn the three key methods of integration to turn scattered data into actionable insights.

Why Integrating Real Estate Data is Critical

Real estate data is highly fragmented. Property details often sit in different systems: land title records, council zoning databases, property listing sites, tenancy records and geospatial datasets.

Without a way to connect this information, we end up with a pile of disconnected data points that fail to tell the full story of a property. This is why effective data integration is so important.

Data integration is the key to transforming raw data into actionable insights. It allows real estate professionals, investors and analysts to derive meaningful conclusions—whether it’s tracking ownership, understanding market trends or making informed investment decisions.

In this article, we explain the three primary ways to integrate real estate data: geospatial relationships, title matches and address matching. Each has its own strengths and limitations, depending on the nature of the data and the use case.

1. Geospatial Relationships

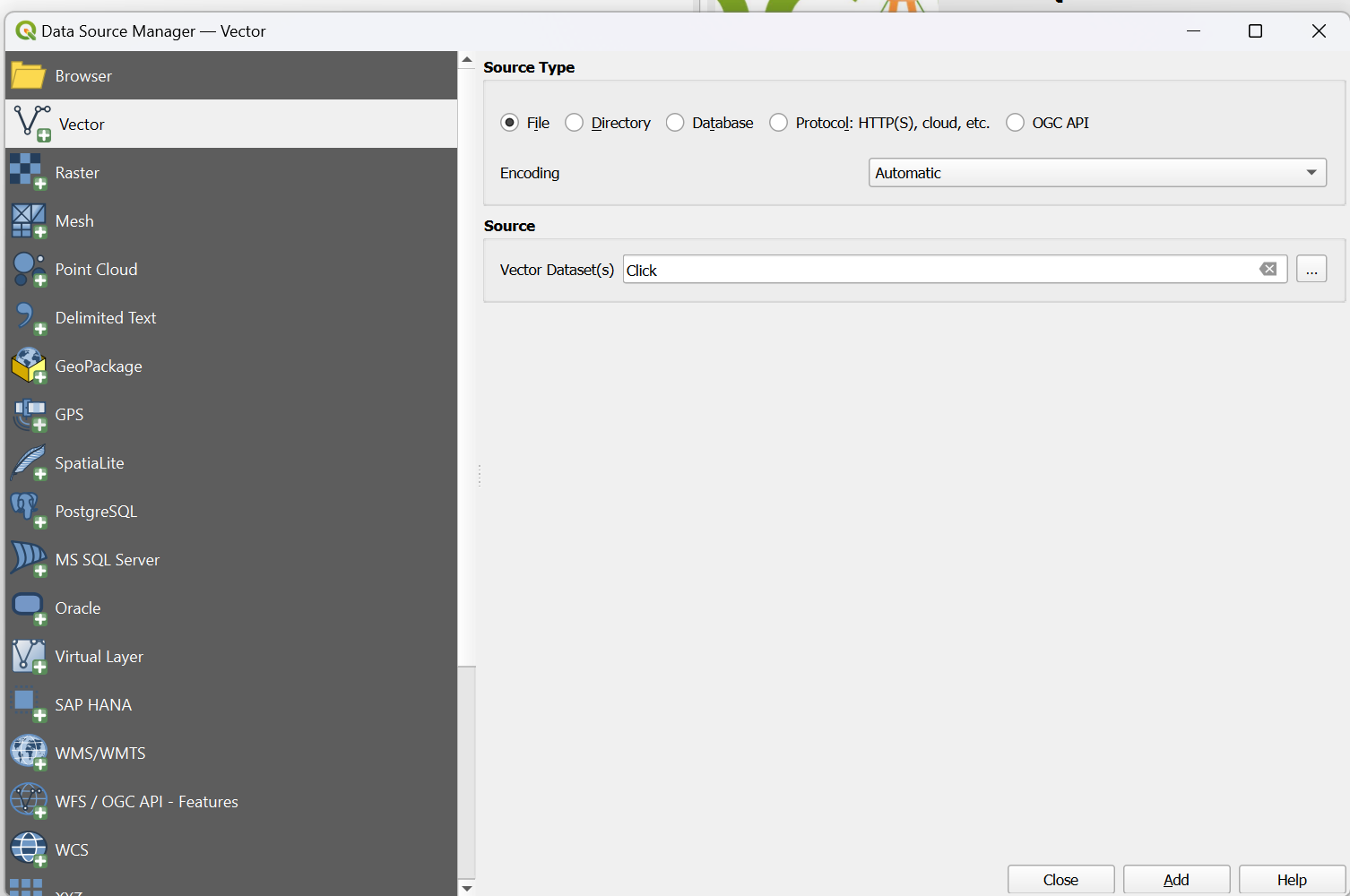

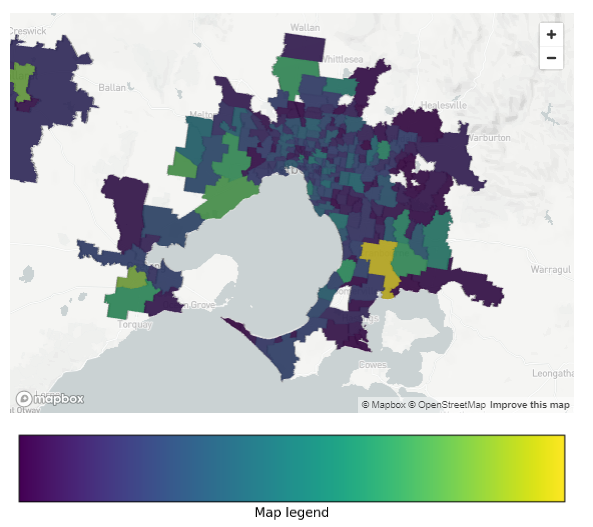

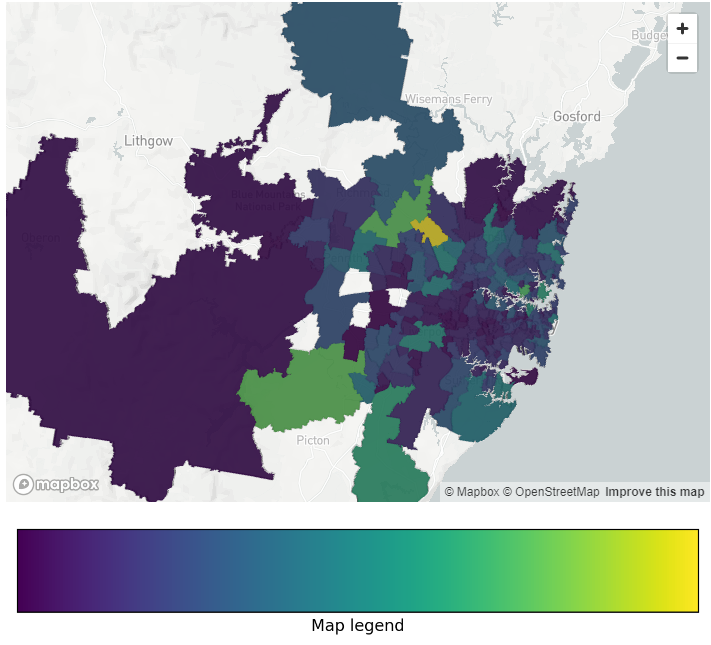

What it is: Geospatial integration links data based on location. By using coordinates (latitude and longitude), data points from different sources can be spatially joined.

How it works: When you overlay different datasets—such as zoning maps, property sales records, and infrastructure plans—you can see relationships that wouldn’t be obvious otherwise. For example, a property’s proximity to schools, flood zones, or transport hubs can be determined through geospatial analysis.
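To make this concrete, here is a minimal Python sketch of two common spatial operations: a point-in-polygon test (which zone contains a property?) and a great-circle distance (how far away is the nearest school?). The data structures and the `spatial_join` helper are hypothetical. The ray-casting test treats longitude and latitude as flat coordinates, which is a reasonable approximation only for small polygons; production systems would normally use a GIS library or a spatial database instead.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]  # (longitude, latitude) in decimal degrees
EARTH_RADIUS_KM = 6371.0     # mean Earth radius


def point_in_polygon(pt: Point, polygon: List[Point]) -> bool:
    """Ray casting: the point is inside if a ray from it crosses the boundary an odd number of times."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # this edge straddles the ray's latitude
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:  # the crossing lies east of the point
                inside = not inside
    return inside


def haversine_km(a: Point, b: Point) -> float:
    """Great-circle distance between two (lon, lat) points, in kilometres."""
    lon1, lat1, lon2, lat2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def spatial_join(properties: Dict[str, Point],
                 zones: Dict[str, List[Point]],
                 schools: Dict[str, Point]) -> Dict[str, Tuple[Optional[str], str, float]]:
    """For each property: (containing zone or None, nearest school, distance in km)."""
    results = {}
    for pid, loc in properties.items():
        zone = next((name for name, poly in zones.items() if point_in_polygon(loc, poly)), None)
        nearest = min(schools, key=lambda s: haversine_km(loc, schools[s]))
        results[pid] = (zone, nearest, round(haversine_km(loc, schools[nearest]), 3))
    return results
```

Neither dataset needs to share an identifier with the other; the only common ground is the coordinates, which is exactly what makes this method useful for overlaying zoning, hazard and infrastructure layers.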

Key advantages: A property’s location doesn’t change, so this method is robust to errors in other fields. Even if an address is recorded incorrectly, the property’s spatial footprint stays the same.

Limitations: Geospatial integration requires well-defined and accurate location data. If two datasets use different coordinate systems or resolutions, alignment issues can arise. Location alone also can’t establish legal facts such as ownership or title boundaries, which real estate data often depends on.

2. Title Matches

What it is: Title matching connects datasets based on property ownership records. This approach relies on land title numbers, which are unique identifiers assigned by government agencies.

How it works: When a property is bought or sold, title details are updated in official registries. Matching records across datasets using title numbers ensures that ownership details, sales history and encumbrances (such as mortgages or caveats) are linked correctly.
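Title numbers are only reliable join keys once they are written the same way in every dataset. The sketch below assumes, hypothetically, a volume/folio style reference that one source records as `1234/567` and another as `Vol 1234 Fol 567`. It normalises both to a single key and then performs an exact join. The record fields (`title`, `owner`, `price`) are illustrative.

```python
import re
from typing import Dict, List, Optional


def normalise_title(ref: str) -> Optional[str]:
    """Reduce a volume/folio reference to a canonical 'VOLUME/FOLIO' key.

    Assumed input formats: '1234/567' or 'Vol 1234 Fol 567'. Anything that
    doesn't contain exactly two numbers is rejected rather than guessed at.
    """
    numbers = re.findall(r"\d+", ref)
    if len(numbers) != 2:
        return None
    volume, folio = (int(n) for n in numbers)  # int() also strips leading zeros
    return f"{volume}/{folio}"


def title_join(ownership: List[Dict], sales: List[Dict]) -> List[Dict]:
    """Exact join of sales records onto ownership records via the normalised title key."""
    owners_by_title = {}
    for rec in ownership:
        key = normalise_title(rec["title"])
        if key is not None:
            owners_by_title[key] = rec
    joined = []
    for sale in sales:
        owner = owners_by_title.get(normalise_title(sale["title"]))
        if owner is not None:  # unmatched sales are dropped here; log them in practice
            joined.append({**owner, **sale})
    return joined
```

Because the key is exact, a sale either links to one title or not at all. There is no fuzzy "close enough", which is what makes the method suitable for legal and financial work.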

Key advantages: Title numbers are unique, making this method highly reliable for tracking ownership and transactions. It’s essential for legal and financial applications, such as mortgage assessments or due diligence for property acquisitions.

Limitations: Title-based integration struggles with temporal changes. Ownership structures change, subdivisions occur, and title references can be updated. If datasets don’t capture changes in sync, they can become misaligned.
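One common way to soften this is an "as-of" match: rather than joining on the title alone, each ownership record also carries the period during which it was valid, and a transaction is linked to whichever record was current on the transaction date. A minimal sketch, assuming a hypothetical title history with `valid_from` and `valid_to` dates (where `valid_to=None` means still current):

```python
from datetime import date
from typing import Dict, List, Optional


def owner_as_of(history: List[Dict], title: str, when: date) -> Optional[str]:
    """Return the owner recorded against `title` on date `when`, or None.

    Validity intervals are treated as half-open: valid_from <= when < valid_to.
    """
    for row in history:
        if row["title"] != title:
            continue
        started = row["valid_from"] <= when
        not_ended = row["valid_to"] is None or when < row["valid_to"]
        if started and not_ended:
            return row["owner"]
    return None


# Hypothetical history for a single title that changed hands in mid-2020.
history = [
    {"title": "1234/567", "owner": "A", "valid_from": date(2010, 1, 1), "valid_to": date(2020, 6, 30)},
    {"title": "1234/567", "owner": "B", "valid_from": date(2020, 6, 30), "valid_to": None},
]

# A mortgage registered in 2019 belongs with owner A, even though B holds the title today.
print(owner_as_of(history, "1234/567", date(2019, 3, 1)))  # prints: A
```

Subdivisions need an extra step that this sketch leaves out: a table mapping each cancelled parent title to the new child titles created from it.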

3. Address Matching

What it is: Address matching integrates datasets by linking properties based on their address details.

How it works: Addresses are matched across different data sources using structured comparisons. This can involve simple string matching (e.g., “10 Smith Street” vs “10 Smith St”) or more complex approaches that account for variations in formatting, typos, and missing components. Some systems use reference databases, such as the G-NAF (Geocoded National Address File) in Australia, to standardise addresses.
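Below is a minimal, standard-library-only sketch of the normalise-then-compare approach. The abbreviation table is a tiny hypothetical stand-in for a proper standard list or a reference file such as G-NAF, and the 0.8 similarity threshold is an assumption you would tune on your own data. Street numbers are compared exactly, because a plain similarity score would happily treat "10 Smith St" and "12 Smith St" as near-identical.

```python
import re
from difflib import SequenceMatcher
from typing import Iterable, Optional, Tuple

# Hypothetical, deliberately small abbreviation table.
STREET_TYPES = {"STREET": "ST", "ROAD": "RD", "AVENUE": "AVE", "DRIVE": "DR", "PLACE": "PL"}


def normalise_address(addr: str) -> str:
    """Uppercase, turn punctuation (except '/') into spaces, and abbreviate street types."""
    tokens = re.sub(r"[^\w\s/]", " ", addr.upper()).split()
    return " ".join(STREET_TYPES.get(t, t) for t in tokens)


def split_number(normalised: str) -> Tuple[str, str]:
    """Split off the leading token (assumed to be the street number) from the rest."""
    number, _, street = normalised.partition(" ")
    return number, street


def best_match(addr: str, candidates: Iterable[str], threshold: float = 0.8) -> Optional[str]:
    """Return the candidate with the same street number and the most similar street name."""
    number, street = split_number(normalise_address(addr))
    best, best_score = None, 0.0
    for cand in candidates:
        c_number, c_street = split_number(normalise_address(cand))
        if c_number != number:
            continue  # never fuzzy-match the street number itself
        score = SequenceMatcher(None, street, c_street).ratio()
        if score > best_score:
            best, best_score = cand, score
    return best if best_score >= threshold else None
```

For example, `best_match("10 Smtih Street", ["10 Smith St", "12 Smith St"])` returns `"10 Smith St"`: the typo and the long-form street type are absorbed, while number 12 is never considered.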

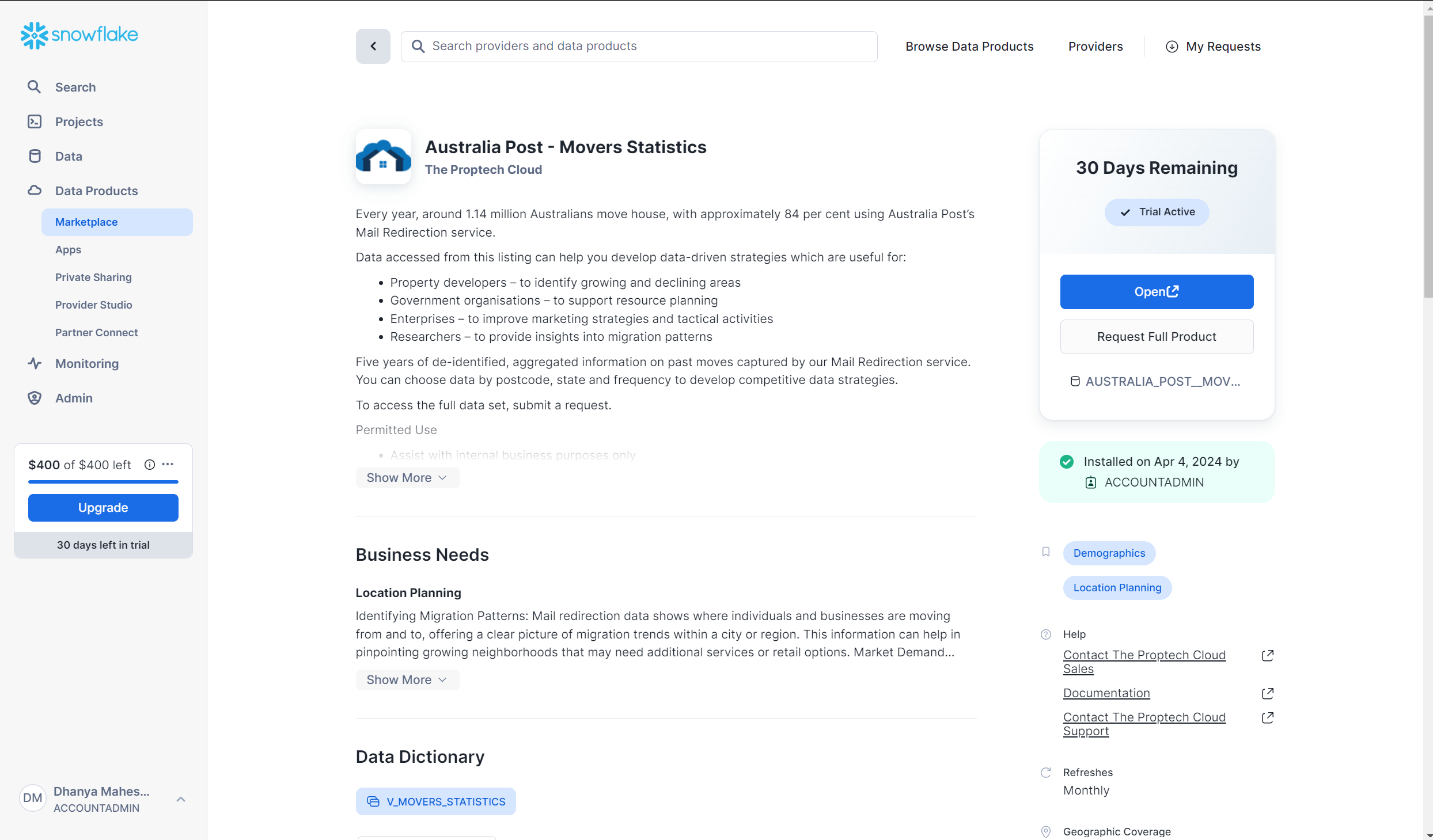

Key advantages: Address matching is often the easiest and most accessible method of integration. It is useful for linking datasets where title information isn’t available, such as real estate listings, valuation reports, or demographic datasets.

Limitations: Address-based integration is prone to inconsistencies. Minor differences in how addresses are recorded can lead to failed matches, and properties with multiple units or several access points create ambiguity. Without standardisation, address matching can produce duplicate or missing records.
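One way to reduce unit ambiguity is to parse the unit into its own field before comparing, so that "2/10 Smith St" and "Unit 2, 10 Smith Street" resolve to the same key while "10 Smith St" stays distinct. The patterns below are hypothetical and cover only a few common layouts; dedicated address parsers handle far more variation.

```python
import re
from typing import NamedTuple, Optional


class AddressKey(NamedTuple):
    unit: Optional[str]
    number: str
    street: str


ABBREVIATIONS = {"STREET": "ST", "ROAD": "RD", "AVENUE": "AVE"}  # hypothetical, tiny

# Hypothetical layouts (after cleaning): "2/10 SMITH ST", "UNIT 2 10 SMITH ST", "10 SMITH ST".
SLASH_FORM = re.compile(r"^(?P<unit>\w+)\s*/\s*(?P<number>\d+\w*)\s+(?P<street>.+)$")
UNIT_FORM = re.compile(r"^(?:UNIT|APT|FLAT)\s+(?P<unit>\w+)\s+(?P<number>\d+\w*)\s+(?P<street>.+)$")
PLAIN_FORM = re.compile(r"^(?P<number>\d+\w*)\s+(?P<street>.+)$")


def clean(addr: str) -> str:
    """Uppercase, replace punctuation other than '/' with spaces, abbreviate street types."""
    tokens = re.sub(r"[^\w\s/]", " ", addr.upper()).split()
    return " ".join(ABBREVIATIONS.get(t, t) for t in tokens)


def address_key(addr: str) -> Optional[AddressKey]:
    """Parse an address into (unit, number, street); None if no pattern fits."""
    text = clean(addr)
    for pattern in (SLASH_FORM, UNIT_FORM, PLAIN_FORM):
        m = pattern.match(text)
        if m:
            # PLAIN_FORM has no unit group, so .get() yields None for whole-lot addresses.
            return AddressKey(m.groupdict().get("unit"), m.group("number"), m.group("street"))
    return None
```

Returning `None` for unrecognised layouts, rather than guessing, keeps those records visible for manual review instead of silently creating a wrong link.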

When To Use Each Approach

- Use geospatial relationships when integrating datasets based on physical location, such as infrastructure impact studies, zoning analysis or proximity-based valuations.

- Use title matches for legally binding property transactions, ownership tracking and financial due diligence.

- Use address matching when working with customer-facing datasets, listings, or demographic analysis where legal identifiers aren’t available.

In many cases, a combination of these methods provides the most accurate results.

For example, a property data platform might use title matching to ensure ownership accuracy while also using geospatial analysis for insights on location-driven value.
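A common way to combine the methods is a cascade: try the most authoritative key first, fall back to weaker ones, and record which method produced each link so lower-confidence matches can be reviewed. The sketch below assumes hypothetical record fields (`title`, `address`, `location`), with titles and addresses already normalised, and a 20-metre search radius for the geospatial fallback. Both the order and the radius are design choices, not fixed rules.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]  # (longitude, latitude) in decimal degrees


def _dist_m(a: Point, b: Point) -> float:
    """Equirectangular approximation in metres; adequate over a few hundred metres."""
    lat = math.radians((a[1] + b[1]) / 2)
    dx = math.radians(b[0] - a[0]) * math.cos(lat)
    dy = math.radians(b[1] - a[1])
    return 6_371_000 * math.hypot(dx, dy)


def link_record(record: Dict, reference: List[Dict],
                max_metres: float = 20.0) -> Tuple[Optional[str], Optional[str]]:
    """Return (property_id, method) for the first method that succeeds, else (None, None)."""
    # 1. Title: the most authoritative key when both sides have one.
    if record.get("title"):
        for prop in reference:
            if prop.get("title") == record["title"]:
                return prop["id"], "title"
    # 2. Address: exact match on a pre-normalised address string.
    if record.get("address"):
        for prop in reference:
            if prop.get("address") == record["address"]:
                return prop["id"], "address"
    # 3. Location: nearest reference property within max_metres.
    if record.get("location"):
        candidates = [(_dist_m(record["location"], p["location"]), p["id"])
                      for p in reference if p.get("location")]
        if candidates:
            dist, pid = min(candidates)
            if dist <= max_metres:
                return pid, "geospatial"
    return None, None
```

Keeping the method label next to each link makes it easy to report, for instance, what share of records could only be matched on location.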

An Additional Consideration: Just-in-Time vs. Precalculated Integration

One important factor when integrating real estate data is whether the integration should be performed on demand (just-in-time) or precalculated.

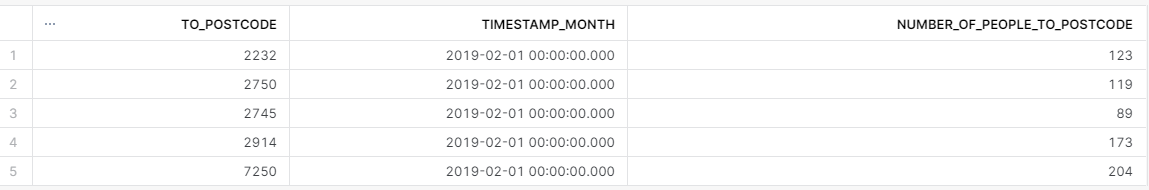

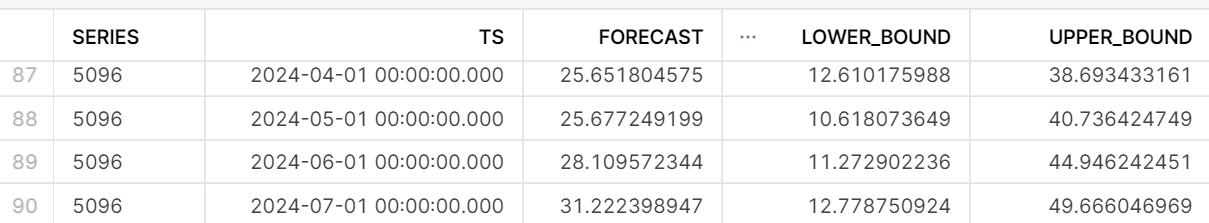

- Just-in-time integration pulls and matches data when a user requests it. This is useful when dealing with frequently changing datasets, such as live property listings or market analysis tools.

- Precalculated integration, on the other hand, processes and stores integrated data ahead of time, making it faster to retrieve but potentially outdated.
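The trade-off can be sketched in a few lines of Python. Here `matcher` is a hypothetical placeholder for whatever matching logic you use (title, address or geospatial). The precalculated index runs it once for every record up front. The just-in-time version runs it on request and, as an illustrative assumption showing a common middle ground, caches each answer for a configurable number of seconds.

```python
import time
from typing import Any, Callable, Dict, Hashable, Iterable, Tuple


class PrecalculatedIndex:
    """Runs the matcher for every record up front; lookups are plain dictionary reads."""

    def __init__(self, record_ids: Iterable[Hashable], matcher: Callable[[Hashable], Any]):
        self.built_at = time.time()
        self.links: Dict[Hashable, Any] = {rid: matcher(rid) for rid in record_ids}

    def lookup(self, rid: Hashable) -> Any:
        # Fast, but only as fresh as the last rebuild (see built_at).
        return self.links.get(rid)


class JustInTimeMatcher:
    """Runs the matcher when a record is requested, caching each answer for `ttl` seconds."""

    def __init__(self, matcher: Callable[[Hashable], Any], ttl: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.matcher = matcher
        self.ttl = ttl
        self.clock = clock
        self._cache: Dict[Hashable, Tuple[Any, float]] = {}

    def lookup(self, rid: Hashable) -> Any:
        now = self.clock()
        cached = self._cache.get(rid)
        if cached is not None and now - cached[1] < self.ttl:
            return cached[0]  # recent enough: reuse the earlier result
        result = self.matcher(rid)  # otherwise match against the live data now
        self._cache[rid] = (result, now)
        return result
```

Setting `ttl=0` gives pure just-in-time behaviour, while rebuilding the precalculated index on a schedule is one common way to bound how stale it can become.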

Which method is right for your needs? That’s a question we’ll explore in more detail in an upcoming article.

Assessing Your Data Integration Approach

If you’re working with real estate data, it’s worth assessing which integration method best suits your needs. Are you working with ownership records that require absolute accuracy? Are you analysing location-based trends? Or do you need to link addresses across multiple systems?

Each approach has its place, and choosing the right one ensures better insights, fewer mismatches and more reliable decision-making. Fortunately, there are software solutions that specialise in integrating real estate data, as well as consultants who can help design the right approach for your business. If your data integration isn’t working as expected, it may be time to rethink your strategy.